Why Banks Must Validate the Identity of AI Agents

As we look ahead to 2026, it will likely be recognized as the year that banks not only began deploying agentic AI at scale but also started interacting with their customers’ agents. In fact, many of these agents will become customers themselves. In banking, however, trust is currency. Every interaction, whether a teller transaction, an instant payment, or an API call, depends on knowing exactly who is on both sides of the transaction. In this article, we examine how banks can begin building the infrastructure to validate both bank-created agents and customer agents.

The Background on Agentic Identification

As AI agents begin to handle customer service, payment initiation, fraud detection, and even investment recommendations, the question becomes: how do we know that an AI acting on someone’s behalf is truly authorized and accountable?

Without a verified identity, AI agents become a compliance and security blind spot, and the risk/reward profile becomes prohibitive. Risks include:

- Unauthorized transactions: A rogue or spoofed AI agent could initiate payments or alter customer records.

- Regulatory violations: KYC/AML frameworks require knowing your customer, and that requirement extends beyond human customers to the digital agents acting on their behalf.

- Fraud amplification: AI systems can scale malicious activity far faster than human bad actors.

- Reputation risk: A “fake” AI agent making a harmful decision could be attributed to your bank.

One tangible step banks can take immediately is to rethink their permissioning standards and structure. Most banks grant humans access to an entire application, or use just two or three authorization levels within an application. Going forward, however, an agent may need far more granular access, limited to one part of an application, such as a single function within a bank’s human resources or core system.
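To illustrate the difference, here is a minimal Python sketch contrasting a coarse, application-wide human role with a narrowly scoped agent grant. It assumes a hypothetical `resource:action` scope convention (for example `core:accounts.balance.read`); the scope strings, role names, and the `AgentGrant` class are illustrative only, not any vendor’s permission model.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable

# Hypothetical coarse role of the kind humans often receive today:
# access to the whole core application.
HUMAN_ROLES = {
    "core_banking_user": frozenset({"core:*"}),
}


@dataclass(frozen=True)
class AgentGrant:
    """Illustrative grant: an agent holds only the narrow scopes its task needs."""
    agent_id: str
    scopes: FrozenSet[str]


def scope_matches(granted: str, requested: str) -> bool:
    """True if a granted scope covers the requested one.

    Supports exact matches and a trailing wildcard such as 'core:*'.
    """
    if granted.endswith("*"):
        return requested.startswith(granted[:-1])
    return granted == requested


def is_allowed(scopes: Iterable[str], requested: str) -> bool:
    """Deny by default: allow only if some granted scope covers the request."""
    return any(scope_matches(g, requested) for g in scopes)


# A hypothetical agent that may only read balances in the core system.
balance_agent = AgentGrant(
    agent_id="agent-001",
    scopes=frozenset({"core:accounts.balance.read"}),
)

print(is_allowed(balance_agent.scopes, "core:accounts.balance.read"))          # True
print(is_allowed(balance_agent.scopes, "core:payments.initiate"))              # False
print(is_allowed(HUMAN_ROLES["core_banking_user"], "core:payments.initiate"))  # True
```

The deny-by-default check means every new capability must be granted to an agent explicitly, which suits agents that are responsible for only one small step in a workflow.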

Banks are encouraged to experiment with and think through these items now, especially when choosing application vendors.

Letting an unverified AI agent act is like letting someone walk into your vault wearing a ski mask. Fortunately, the problem is solvable, and it is worth addressing now, before your bank gets too far along its agentic journey.

Principles of AI Agent Identity in Banking

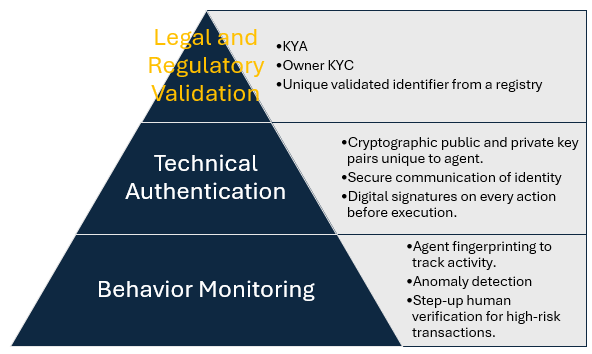

For banks, the gold standard is binding every AI agent to a traceable, accountable entity, whether the agent belongs to a customer, an employee, or the bank itself. This requires a layered approach:

- Legal & Regulatory Alignment

  - Treat AI agents as “digital representatives” under existing KYC/AML frameworks. Just as banks must know their customers, the new requirement is “Know Your Agent,” or KYA. In many ways, AI agents have more in common with humans than with IT resources: they grow, evolve, learn, adapt, and eventually age out. Like humans, each agent comes with a risk profile that needs to be tracked and managed accordingly.

  - Require agents to be tied to an account holder or institution with validated identity documentation. Every bank agent should be certified, with its certification number embedded, when it moves into production. A bank should not only manage a repository of certified agents but also maintain a directory that other banks, employees, and customers can check.

- Technical Authentication

  - Assign cryptographic keys or certificates unique to each agent. Once certified, an agent receives a private/public key pair, and its public key can be validated on chain.

  - Use secure enclaves or hardware security modules (HSMs) to protect identity credentials.

  - Secure communication is a must for passing credentials, so banks should require mutual Transport Layer Security (mTLS) or similar secure protocols for all agent-to-bank and agent-to-agent communication. Because both parties authenticate each other, this helps prevent “man-in-the-middle” attacks.

- Behavioral Verification

  - Use AI-based “agent fingerprinting” to confirm that the same model or instance is interacting over time.

  - Track decision patterns and transaction habits to detect anomalies.
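To make behavioral verification a little more concrete, here is a minimal Python sketch that keeps a per-agent baseline of payment amounts and flags any amount more than a chosen number of standard deviations from that agent’s mean. The z-score rule, the threshold of 3, the 10-observation warm-up, and the `AgentBehaviorMonitor` name are assumptions chosen for illustration; a production system would likely track richer features such as timing, counterparties, and action types.

```python
import statistics
from collections import defaultdict


class AgentBehaviorMonitor:
    """Per-agent baseline of transaction amounts with a simple z-score check.

    Assumption: this z-score rule is a stand-in for whatever anomaly model
    a bank actually uses; it is illustrative only.
    """

    def __init__(self, threshold: float = 3.0, min_history: int = 10):
        self.threshold = threshold        # std devs from the mean that count as anomalous
        self.min_history = min_history    # observations required before scoring starts
        self.history = defaultdict(list)  # agent_id -> past (non-flagged) amounts

    def observe(self, agent_id: str, amount: float) -> bool:
        """Record an amount and return True if it looks anomalous for this agent."""
        past = self.history[agent_id]
        anomalous = False
        if len(past) >= self.min_history:
            mean = statistics.fmean(past)
            stdev = statistics.pstdev(past)
            if stdev == 0:
                # Perfectly consistent history: any deviation is notable.
                anomalous = amount != mean
            else:
                anomalous = abs(amount - mean) / stdev > self.threshold
        # Only non-flagged amounts extend the baseline, so flagged
        # outliers do not inflate the agent's "normal" range.
        if not anomalous:
            past.append(amount)
        return anomalous


monitor = AgentBehaviorMonitor()
for amt in [100, 105, 98, 102, 99, 101, 103, 97, 100, 104]:
    monitor.observe("agent-001", amt)      # warm-up: builds the baseline

print(monitor.observe("agent-001", 101))    # False
print(monitor.observe("agent-001", 5_000))  # True
```

A flagged result would typically feed a review queue or trigger a step-up check rather than block the agent outright; that routing decision is left to the bank’s own policy.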

Methods to Tie an AI Agent to an Accountable Human or Company

When planning an enterprise agent platform, banks can work with one or more trusted partners to accomplish the steps below. Potential partners include Okta, CrowdStrike, Accenture, ConductorOne, and OwnID.

Here are practical ways banks can work with these partners to ensure every AI agent has a verified identity:

- KYC-Linked Agent Profiles

  - Require the human or corporate owner of the agent to complete standard KYC, then attach the verified credentials to the agent. In practice, this means storing a verified link between the agent’s cryptographic ID and the owner’s customer profile.

- Digital Signatures on All Actions

  - Every action or decision the agent generates is signed with its private key. This is a key evaluation point for banks: at present, we have observed bank agents without traceable ownership and without consistent activity logging. When vendors pitch applications that contain agentic AI, banks should ask about the use of digital signatures and traceability within those platforms.

  - The bank can verify the signature against the registered public key before processing the action.

- Regulatory-Grade Credentialing

  - Issue agents unique identifiers in a registry (similar to Legal Entity Identifiers, or LEIs, for companies).

  - Regulators and counterparties can query this registry to confirm an agent’s authorization.

- Multi-Factor for AI Agents

  - Just as high-risk human actions require MFA, high-risk AI actions can require an additional verification step from the owner or from another authorized agent or human.

- Agent Lifecycle Management

  - Agents need issuance, renewal, and revocation processes that are easy to execute, because AI agents may have short lifespans. Since agents are faster to develop and test than traditional robotic process automation (RPA) bots, banks will likely deploy them more often and retire them sooner.

  - Many banks will likely need to make their authorization and permissioning standards more granular. Unlike a human, an agent may be responsible for only one small part of a workflow.

  - If an agent is compromised or no longer authorized, its credentials must be revoked immediately.
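Several of the steps above (a registry linking each agent to a KYC-verified owner, signature verification against the registered public key, step-up approval for high-risk actions, and expiry and revocation) can be sketched together. This is a minimal sketch, assuming the third-party Python `cryptography` package for Ed25519 signatures; Ed25519 is one reasonable scheme, not one the article prescribes. The `AgentRegistry` and `AgentRecord` names, the $10,000 step-up threshold, and the accept/step-up/reject outcomes are hypothetical illustrations, not a standard or any partner’s product.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

from cryptography.exceptions import InvalidSignature
from cryptography.hazmat.primitives.asymmetric.ed25519 import (
    Ed25519PrivateKey,
    Ed25519PublicKey,
)

STEP_UP_THRESHOLD = 10_000  # hypothetical: larger payments need owner approval


def canonical_bytes(action: dict) -> bytes:
    """Serialize an action deterministically so signer and verifier use identical bytes."""
    return json.dumps(action, sort_keys=True, separators=(",", ":")).encode()


@dataclass
class AgentRecord:
    """Hypothetical registry entry binding an agent to a KYC-verified owner."""
    agent_id: str
    owner_customer_id: str          # link to the owner's verified KYC profile
    public_key: Ed25519PublicKey    # registered at certification time
    expires_at: datetime            # short-lived credentials force regular renewal
    revoked: bool = False


class AgentRegistry:
    """In-memory stand-in for a bank's registry/directory of certified agents."""

    def __init__(self) -> None:
        self._records: dict = {}

    def register(self, record: AgentRecord) -> None:
        self._records[record.agent_id] = record

    def revoke(self, agent_id: str) -> None:
        # Takes effect on the very next verification call.
        self._records[agent_id].revoked = True

    def verify_action(self, agent_id: str, action: dict, signature: bytes,
                      owner_approved: bool = False) -> str:
        """Return 'accept', 'step_up', or 'reject' for a signed agent action."""
        record = self._records.get(agent_id)
        if record is None or record.revoked:
            return "reject"  # unknown or revoked agent
        if datetime.now(timezone.utc) >= record.expires_at:
            return "reject"  # credential expired; agent must be renewed
        try:
            record.public_key.verify(signature, canonical_bytes(action))
        except InvalidSignature:
            return "reject"  # forged or tampered action
        if action.get("amount", 0) > STEP_UP_THRESHOLD and not owner_approved:
            return "step_up"  # high-risk action: require an extra approval
        return "accept"


# Demo. In production the private key would stay inside an HSM or secure enclave.
agent_key = Ed25519PrivateKey.generate()
registry = AgentRegistry()
registry.register(AgentRecord(
    agent_id="agent-001",
    owner_customer_id="cust-42",
    public_key=agent_key.public_key(),
    expires_at=datetime.now(timezone.utc) + timedelta(days=30),
))

payment = {"type": "payment", "amount": 250, "to": "acct-9"}
sig = agent_key.sign(canonical_bytes(payment))
print(registry.verify_action("agent-001", payment, sig))   # accept

tampered = dict(payment, amount=25_000)
print(registry.verify_action("agent-001", tampered, sig))  # reject

registry.revoke("agent-001")
print(registry.verify_action("agent-001", payment, sig))   # reject
```

The ordering is a design choice: identity, expiry, and signature checks run before any business rule, so a step-up request is only raised for an action that verifiably came from a registered, unrevoked agent. A real deployment would also need replay protection, for example a nonce or timestamp inside each signed action.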

Finally, banks need to look beyond point or one-off application solutions and take an enterprise approach. A bank will need one or more enterprise-wide platforms on which to host and govern not just homegrown agents but third-party agents as well. Developing an approach that can handle thousands of agents at scale will help future-proof the organization.

Putting This into Action

In the coming years, banks will have more AI agents than employees, and those agents will be just as powerful. In fact, in some respects agents will be treated like employees, managed by human resources as much as they are managed as a technology resource. Agentic AI will be a strategic advantage for banks, helping them become more productive and more customer-centric and reduce errors and fraud losses, all while serving as a catalyst for innovation.

Without identity validation, they’re a blank check waiting to be forged.

While many community banks have already embarked on the agentic AI journey, few are developing policies to govern AI agents or platforms to ensure KYA.