The Future of Agentic Teams At Your Bank

This month will likely go down as the “Agentic Big Bang.” The artificial intelligence landscape shifted from “chatting” to mainstream “doing,” and if you are running a community bank in the US, the ground beneath your feet just moved. Over the last two weeks, we had four seminal events: OpenClaw exploded, Anthropic released Claude 4.6 Opus, OpenAI released GPT-5.3 Codex, and Matt Shumer released an article that has become a must-read in Silicon Valley and in financial circles (20 million+ views). This has not been an incremental update to agentic AI; this is the arrival of the “Lead Agent” era for agentic teams.

“Something Big Is Happening”

Matt Shumer, the founder of OthersideAI, produced a viral thesis, Something Big Is Happening. Shumer argues that we have moved past the “wow” phase of AI into a period of massive, quiet substitution of human work with AI work. Shumer claims AI has moved beyond simple pattern recognition and now exhibits both true “judgement” and “taste.” That is, AI isn’t just following instructions but making quality judgements on complex, subjective tasks.

While we don’t subscribe to Shumer’s central thesis about the fall of labor, and we do not believe that humans and tools are interchangeable, his content is worth a read. Shumer’s postulate is valid: now that AI can create other AI, productivity gains will only accelerate. We also support his plea for professionals to stop using free models and keep up with frontier models, and we wholeheartedly endorse his tenet that adaptability is now a hallmark of future success.

Regardless of your level of agreement with Shumer’s arguments, he has provided a suitable backdrop against which to frame the current popularity of using teams of agents.

The Background on Agentic Teams

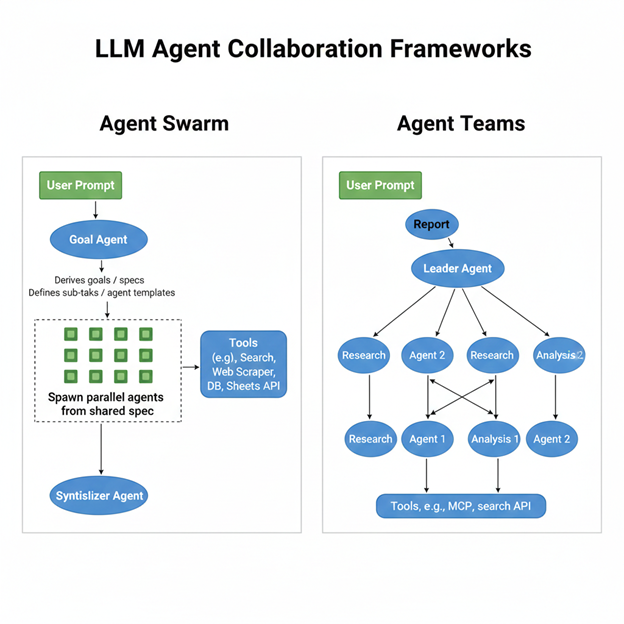

We have written about AI agents several times in the past and even produced a bank executive’s primer on the topic (HERE). Agents can handle a variety of tasks, from research to intelligent autonomous workflows such as loan document preparation. In the past, we have talked about utilizing a single agent or multiple agents in a linear architecture. In the last several weeks, passing the task off to a supervisory agent and letting that agent form a team has become commonplace. In like fashion, utilizing a “swarm” of agents that all conduct a similar task at scale is also now common.

The Leap Forward

While agentic teams and swarms have been in production for the past two years, they became common this month thanks to three events. Here is a quick summary of this month’s developments that bankers should be tracking.

- OpenClaw: While this is a security nightmare, the innovation is stark. OpenClaw is a self-hosted agentic forum that runs on your hardware. Agents are talking to each other, teaching themselves, and partnering with other agents. They share plug-ins, code, and insight. Data sovereignty now takes center stage. OpenClaw will usher in safer, enterprise versions where bank agents will routinely connect to network files and APIs to execute tasks, including booking entire events, managing calendars, or triggering local deployments of code, right from your messaging application (such as Slack or Teams).

- Anthropic’s Claude 4.6 & “Computer Use”: Anthropic’s breakthrough allows the model to “see” and interact with a computer screen like a human. It can move cursors and click buttons not only in browsers but in legacy core banking software (FIS, Fiserv, Jack Henry) that lacks modern APIs, acting as the ultimate “glue” for disconnected systems.

- OpenAI’s GPT-5.3 Codex & Operator: This is no longer a coding assistant; it is an orchestrator. The Operator agent is optimized for browser-based tasks, allowing it to navigate complex web portals and perform deep research with minimal human oversight. If you want your agent to open multiple bank accounts, this is now possible.

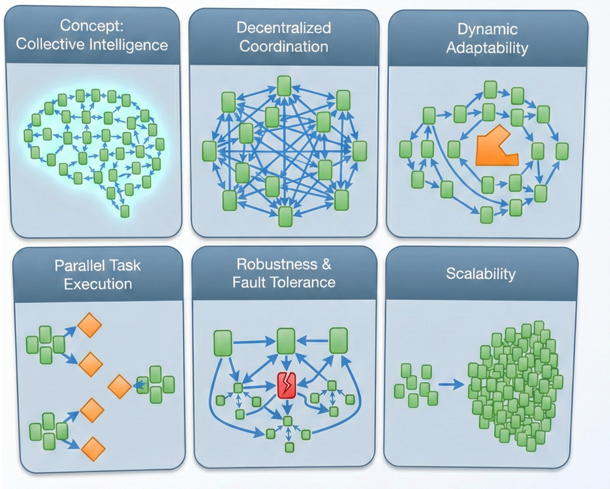

The advantages of using agent teams or swarms are outlined below and include:

- Each agent builds on collective intelligence.

- Coordination of multiple, related tasks is decentralized.

- Agents can be repurposed during a task to decrease the time on task.

- Tasks run in parallel.

- Fault tolerance is built in.

- Scalability is achievable.
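
To make the parallel-execution and fault-tolerance points in the list above concrete, here is a minimal sketch of a swarm in Python. It is an illustration under stated assumptions, not a production design: `research_agent` is a hypothetical stand-in for a real agent call, and the worker count and retry limit are arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def research_agent(company: str) -> str:
    """Hypothetical stand-in for one agent call (for example, a model request)."""
    if not company.strip():
        raise ValueError("empty company name")
    return f"summary for {company}"


def run_swarm(items, agent=research_agent, max_workers=4, max_attempts=2):
    """Run the same agent over many inputs in parallel, retrying failures.

    Returns (results, failures) so one bad task never stops the whole swarm.
    """
    results, failures = {}, {}

    def attempt(item):
        last_error = None
        for _ in range(max_attempts):
            try:
                return agent(item)
            except Exception as exc:  # retry, then report if still failing
                last_error = exc
        raise last_error

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(attempt, item): item for item in items}
        for future in as_completed(futures):
            item = futures[future]
            try:
                results[item] = future.result()
            except Exception as exc:
                failures[item] = str(exc)
    return results, failures


if __name__ == "__main__":
    done, failed = run_swarm(["Acme Hardware", "", "Beta Farms"])
    print(done)
    print(failed)
```

The design choice worth noting is that failures are collected rather than raised, so a human or a lead agent can decide what to do with the tasks that did not complete.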

Why “Self-Creating AI” is the Real Game-Changer

The most startling revelation from the 4.6 Opus and GPT-5.3 Codex launches was that the models are instrumental in their own creation. Models can now successfully debug their own training runs, manage deployment infrastructure, and diagnose their own results.

This is an evolutionary leap. Historically, software followed a linear path: humans wrote code, tested it, and deployed it. With these new tools, AI is self-creating. We have entered a cycle of recursive self-improvement and bankers need to keep up.

Agent teams can present themselves in many ways. Most bankers are familiar with a single agent, but agents can be networked together, each with a different task, and coordinated as a group. Over this past week, using a supervisory or hierarchical agent team has become popular. Still in the experimental phase is letting agents work together as they see fit in a customized, self-organizing fashion that could change with each task.

For banks, this means:

- Every Banker is a Developer: The software “bottleneck” is evaporating. Bankers no longer need to wait for a vendor’s three-year roadmap or their development team to build a model or workflow. If you can describe it, the agent can build it AND execute it.

- Compressed Lifecycles: Development cycles that used to take months are being compressed into weeks or even days because the AI is optimizing its own “brain” as it works.

The Rise of the “Citizen Developer” for Agentic Teams

These platforms effectively turn every banker into a “citizen developer.” You no longer need a massive DevOps team to build a custom workflow. “Vibe Coding,” or the process of describing a solution in plain English, has become more effective. A Chief Credit Officer can “instruct” a lead agent to build sub-agents specifically designed to monitor and aggregate loan repayment times and deposit balances and then send alerts when a borrower’s risk profile changes.
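
As a rough sketch of the Chief Credit Officer example above, the Python below shows a lead agent that runs two sub-agents per borrower and raises an alert when either one reports a finding. Everything here is hypothetical and for illustration only: the `BorrowerSnapshot` fields, the function names, and the thresholds (10 average days late, a 30% deposit decline) are assumptions, and a real deployment would pull data from the bank’s own systems.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class BorrowerSnapshot:
    borrower: str
    days_late: list[int]            # days past due on recent payments
    deposit_balances: list[float]   # period-end balances, oldest first


def repayment_agent(s: BorrowerSnapshot, max_avg_days_late: float = 10.0):
    """Sub-agent: flags borrowers whose payments are trending late."""
    avg = mean(s.days_late)
    return f"average days late {avg:.1f}" if avg > max_avg_days_late else None


def deposit_agent(s: BorrowerSnapshot, max_drop: float = 0.30):
    """Sub-agent: flags a large decline in deposit balances."""
    first, last = s.deposit_balances[0], s.deposit_balances[-1]
    drop = (first - last) / first if first > 0 else 0.0
    return f"deposits down {drop:.0%}" if drop > max_drop else None


def lead_agent(snapshots, sub_agents=(repayment_agent, deposit_agent)):
    """Lead agent: runs every sub-agent per borrower and aggregates alerts."""
    alerts = {}
    for s in snapshots:
        findings = [f for agent in sub_agents if (f := agent(s)) is not None]
        if findings:
            alerts[s.borrower] = findings
    return alerts


if __name__ == "__main__":
    book = [
        BorrowerSnapshot("Borrower A", [0, 2, 1], [100.0, 95.0, 90.0]),
        BorrowerSnapshot("Borrower B", [15, 20, 10], [200.0, 150.0, 100.0]),
    ]
    print(lead_agent(book))
```

Adding a new monitor is a matter of passing another function in `sub_agents`, which mirrors how a lead agent can add sub-agents without changing the rest of the workflow.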

Bank Governance of the “Lethal Trifecta”

While the opportunity for agents to build other agents offers a potential 20% reduction in net costs at banks, this opportunity comes with risks. Security experts warn of the “lethal trifecta”: AI agents that have access to private data, the ability to communicate externally, and the ability to read untrusted content. If an agent reads a Word document containing a hidden “prompt injection,” it could be tricked into exfiltrating sensitive data.
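
One simple way to screen for the lethal trifecta is to inventory each agent’s capabilities and flag any agent that holds all three at once. The sketch below assumes a hypothetical `AgentCapabilities` record maintained by the bank; the field names are ours and do not come from any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    name: str
    reads_private_data: bool
    communicates_externally: bool
    reads_untrusted_content: bool


def has_lethal_trifecta(agent: AgentCapabilities) -> bool:
    """True only when all three risky capabilities are present together."""
    return (
        agent.reads_private_data
        and agent.communicates_externally
        and agent.reads_untrusted_content
    )


def agents_needing_review(agents) -> list[str]:
    """Names of agents that should be redesigned or put behind human approval."""
    return [a.name for a in agents if has_lethal_trifecta(a)]


if __name__ == "__main__":
    inventory = [
        AgentCapabilities("dossier-builder", False, True, True),
        AgentCapabilities("inbox-assistant", True, True, True),
    ]
    print(agents_needing_review(inventory))
```

Removing any one of the three capabilities, for example by blocking outbound communication for an agent that reads customer files, takes the agent off the list.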

The best governance frameworks for Agentic AI for banking in 2026 involve combining traditional AI safety standards (NIST AI RMF, ISO 42001, EU AI Act) with specialized operational protocols like KPMG’s Trusted AI, emphasizing human-in-the-loop, strict access controls, and immutable audit trails.

These references help shift the current bank focus from traditional machine learning and generative AI governance to “autonomous” oversight, which is critical when agents are now influencing authorizations, money movement, and legal frameworks.

Developing an agent governance framework revolves around four main pillars:

Human-Centric Orchestration: Ensures agents operate under “human-led, AI-operated” models, where humans define goals and agents execute within strict boundaries.

The “Agent Fabric”: A governed API and agent identity layer that acts as a central checkpoint for all agent actions, enforcing consistent policy and data access controls in addition to validating an agent’s authorization. Every agent must have a unique traceable ID and permission set.

Decision Provenance Logs: Moves beyond simple audit trails to “reasoning chains.” These logs document not only what an agent did, but also the specific reasoning and data inputs used for each autonomous step. This is the new age of agent auditing. These logs include the real-time, continuous monitoring of “inter-agent communication” to prevent cascading failures in multi-agent systems.

Kill-Switch Mechanisms: Hardcoded thresholds where an agent must immediately cease operations and escalate to a human supervisor if it encounters an “out-of-distribution” scenario. These include hard limits on transaction amounts, counterparty types, and access to PII (Personally Identifiable Information).
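
The last three pillars can be sketched together in a few dozen lines of Python. This is a simplified illustration under our own assumptions, not a reference implementation of any framework named above: `AgentFabric`, `AgentIdentity`, and `KillSwitch` are hypothetical names, the only kill-switch rule shown is a hard transaction limit, and the provenance log is made tamper-evident with a simple SHA-256 hash chain.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str               # unique, traceable ID
    allowed_actions: frozenset  # permission set
    max_transaction: float      # hard limit that trips the kill switch


class KillSwitch(Exception):
    """Raised when an agent must stop and escalate to a human."""


@dataclass
class AgentFabric:
    registry: dict = field(default_factory=dict)
    log: list = field(default_factory=list)
    halted: set = field(default_factory=set)

    def register(self, identity: AgentIdentity) -> None:
        self.registry[identity.agent_id] = identity

    @staticmethod
    def _digest(body: dict, prev_hash: str) -> str:
        payload = json.dumps(body, sort_keys=True) + prev_hash
        return hashlib.sha256(payload.encode()).hexdigest()

    def _record(self, body: dict) -> None:
        # Each entry stores the previous hash, so edits break the chain.
        prev = self.log[-1]["hash"] if self.log else ""
        self.log.append(dict(body, prev_hash=prev, hash=self._digest(body, prev)))

    def authorize(self, agent_id, action, amount, reasoning, inputs) -> bool:
        identity = self.registry.get(agent_id)
        if identity is None or agent_id in self.halted:
            decision = "denied"
        elif action not in identity.allowed_actions:
            decision = "denied"
        elif amount > identity.max_transaction:
            decision = "halted"
            self.halted.add(agent_id)
        else:
            decision = "allowed"
        # Provenance: what was asked, why, with which inputs, and the outcome.
        self._record({"agent_id": agent_id, "action": action, "amount": amount,
                      "reasoning": reasoning, "inputs": inputs,
                      "decision": decision})
        if decision == "halted":
            raise KillSwitch(f"{agent_id} exceeded its limit; escalate to a human")
        return decision == "allowed"

    def verify_log(self) -> bool:
        prev = ""
        for entry in self.log:
            body = {k: v for k, v in entry.items() if k not in ("hash", "prev_hash")}
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(body, prev):
                return False
            prev = entry["hash"]
        return True
```

In use, every agent action would pass through `authorize`, which checks identity and permissions, writes the reasoning and inputs to the log whether or not the action is allowed, and halts the agent once a hard limit is crossed. A halted agent stays denied until a human intervenes.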

As of early 2026, banks that are experienced with agentic AI governance have moved away from “static” risk assessments toward dynamic risk profiling, where the governance system adjusts an agent’s permissions in real time based on market volatility or detected anomalies. This is a completely different approach, one that many bank risk departments must get used to and start to train on.
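
Dynamic risk profiling can be pictured as a function that recomputes an agent’s limit from current signals instead of reading it from a static policy. The formula below is purely illustrative and our own assumption: the volatility baseline of 20, the 25% reduction per anomaly, and the suspension at three anomalies are placeholder values a risk team would need to set.

```python
def adjusted_limit(base_limit: float, volatility_index: float,
                   anomaly_count: int, calm_volatility: float = 20.0) -> float:
    """Scale an agent's transaction limit down as risk signals rise.

    All parameters and thresholds are illustrative assumptions.
    """
    if anomaly_count >= 3:
        return 0.0  # suspend the agent until a human reviews it
    # Volatility at or below the calm baseline leaves the limit unchanged.
    vol_factor = calm_volatility / max(volatility_index, calm_volatility)
    # Each detected anomaly removes a quarter of the remaining limit budget.
    anomaly_factor = 1.0 - 0.25 * anomaly_count
    return round(base_limit * vol_factor * anomaly_factor, 2)


if __name__ == "__main__":
    print(adjusted_limit(10_000, volatility_index=15, anomaly_count=0))
    print(adjusted_limit(10_000, volatility_index=40, anomaly_count=1))
    print(adjusted_limit(10_000, volatility_index=40, anomaly_count=3))
```

The point of the sketch is the shape of the control, not the numbers: permissions become an output that is recalculated continuously, which is what distinguishes this from a static risk assessment.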

10 Agentic Use Cases for Modern Banking

We are now at the point where a business line banker can create a lead agent in plain English and that agent can spawn and manage “Sub-Agents” to handle specific tasks. Here are currently the most common use cases for bank agent teams:

- Client Pre-Meeting Dossiers: Scours LinkedIn, SEC filings, and local news to create a “cheat sheet” for Relationship Managers.

- Transaction Exception Processing: Identifies exception payments, checks historical patterns, and drafts a resolution for human approval.

- Regulatory Monitoring: Monitors the Federal Register and state updates, flagging items specific to the bank’s asset size and risk profile. When an item is found, a risk plan is automatically generated.

- Loan Document Review: Checks loan document packages for completeness, making sure all documents are executed, in the proper name, and with the proper address, and that all non-standard language is highlighted. Produces an audit log.

- Loan Document Synthesis: Processes 500+ pages of environmental/title reports, highlighting only clauses that deviate from standard risk appetite.

- Sentiment & Social Listening: Monitors local community groups and social media for sentiment to help with deposit marketing and reputational management.

- Stablecoin & Digital Asset Monitoring: Tracks L1/L2 gas fees and liquidity pools for banks issuing tokenized deposits.

- Vendor Due Diligence: Automatically pings vendors for updated documents (financials, SOC 2 reports, etc.) and flags expiring certificates. For new vendors, the agents collect and extract information, complete the vendor management form, email the vendor with outstanding questions, and record responses.

- Synthetic Data for Risk Models: Generates anonymized datasets to stress-test investment, loan, and deposit portfolios against five-standard-deviation shocks.

- The IT “Sentry”: A local agent that monitors server logs and automatically isolates suspicious processes.
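
To show how small some of these use cases can start, here is a sketch of the “flags expiring certificates” step from the vendor due diligence item above. The tuple layout and the 60-day warning window are assumptions for illustration; a real agent would read expiry dates from the bank’s vendor management records.

```python
from datetime import date, timedelta


def flag_expiring(documents, today: date, window_days: int = 60):
    """Return (vendor, doc_type, status) for expired or soon-to-expire documents.

    documents: iterable of (vendor, doc_type, expiry_date) tuples.
    """
    cutoff = today + timedelta(days=window_days)
    flagged = []
    for vendor, doc_type, expires in documents:
        if expires < today:
            status = "expired"
        elif expires <= cutoff:
            status = "expiring"
        else:
            continue  # nothing to do for documents outside the window
        flagged.append((vendor, doc_type, status))
    return flagged


if __name__ == "__main__":
    vendor_docs = [
        ("Core Vendor", "SOC 2 report", date(2026, 3, 1)),
        ("Card Processor", "insurance certificate", date(2026, 1, 15)),
        ("Statement Printer", "financials", date(2026, 12, 31)),
    ]
    for row in flag_expiring(vendor_docs, today=date(2026, 2, 1)):
        print(row)
```

A sub-agent built around this check could then draft the request email to each flagged vendor for a human to approve.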

Putting This Into Action

Managing agent teams and swarms through a lead agent is the next transformative AI trend to hit banks. The smaller a bank is, the more it can benefit from leveraging agent teams as a workforce multiplier. We have seen credit, marketing, IT, and operational examples where one person can manage 100+ agents and do the work of 20+ humans. The ability to “vibe code” a single lead agent and let that agent build and manage other agents is a change that bankers must have a working knowledge of to be successful in the long run. As for the next steps, bankers should consider:

- Prioritize Data Fluency: Ensure your data is “agent-readable.” Siloed, locked PDFs are the enemy of automation. Begin collecting data and documents in as few locations as possible, using consistent titling structures.

- Establish Agent Governance: Add agentic AI to your governance policy and start to define risk parameters and priority use cases using a crawl-walk-run approach. Define who can “spin up” an agent and what high-stakes actions require a physical “human-in-the-loop” thumbprint.

- Hiring and Training: Include AI and agentic talent assessment when hiring and invest in training bank employees on the use of agentic teams. At a minimum, teach bankers the “art of the possible.”

- Encourage Experimentation: Let your staff explore Codex and Claude in a safe and sane manner. The barrier to entry has never been lower, and there are plenty of low-risk use cases, such as client or vendor research of public information, that can save bankers time while they learn to manage teams of agents.

Banks can leverage agentic AI to revolutionize their operations, from sentiment monitoring and vendor management to risk modeling and IT security. With AI fluency, robust governance, and ongoing experimentation, bankers can take a proactive approach to integrating agent teams. By doing so, even smaller institutions can multiply their workforce and maintain a competitive edge in the rapidly evolving financial landscape.